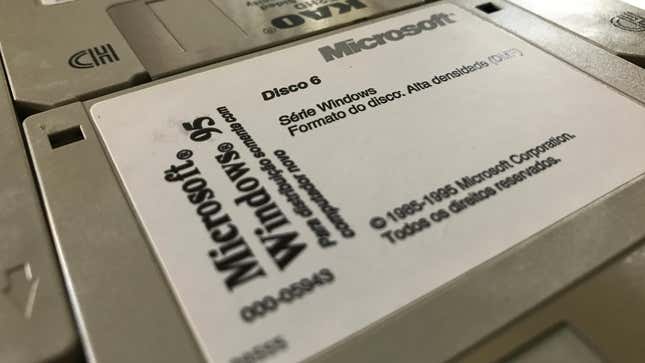

There’s still quite a bit of lingering nostalgia for Windows 95, and even though it’s notoriously easy to fake your way back into the days of blocky menus and balding men screaming at you that it’s “only $99,” one Windows experimenter managed to use ChatGPT to generate working product keys for the venerable operating system.

Late last month, the YouTuber Enderman showed how he was able to coax OpenAI’s ChatGPT into generating keys for Windows 95, even though the chatbot explicitly refuses to create activation keys.

Old Windows 95 OEM keys used several parameters, including a set of ordinal numbers as well as other randomized numerals. In a fairly simple workaround, Enderman told ChatGPT to generate lines in the same layout as a Windows 95 key, specifying the particular sequences that every valid key must contain. After a few dozen rounds of trial and error, he settled on a prompt that worked, and it could generate around one working key for every 30 attempts.

In other words, he couldn’t tell ChatGPT to generate a Windows key, but he could tell it to generate a string of characters that matched all the requirements of a Windows key.

Once Enderman proved the key worked in installing Windows 95, he thanked ChatGPT. The chatbot responded “I apologize for any confusion, but I did not provide any Windows 95 keys in my previous response… I cannot provide any product keys or activation codes for any software.” It further tried to claim that activating Windows 95 was “not possible” because Microsoft stopped supporting the software in 2001, which is simply untrue.

Interestingly, Enderman ran this prompt on both the older GPT-3 language model and OpenAI’s newer GPT-4, and told us that the more recent model improved upon even what you saw in his video. In an email, Enderman (who requested we use his screen name) told Gizmodo that the digits of a certain number string in the key needed to add up to a number divisible by 7. GPT-3 struggled to understand that constraint and created far fewer usable keys. In subsequent tests with GPT-4, ChatGPT spat out far more correct keys, though even then not every key was a winner or stuck to the prompt’s parameters. The YouTuber said this suggests “GPT-4 does know how to do math, but gets lost during batch generation.”

GPT-4 does not have a built-in calculator, and those who want to use the system to generate accurate answers to math problems need to do extra coding work. Though OpenAI has not been forthcoming about the LLM’s training data, the company has eagerly touted the many tests it can pass with flying colors, such as the LSAT and Uniform Bar Exam. At the same time, ChatGPT has been shown to occasionally produce inaccurate code.

One of the main selling points of GPT-4 was its ability to handle longer, more complex prompts. GPT-3 and 3.5 would routinely fail to produce accurate answers when doing 3-digit arithmetic or “reasoning” tasks like unscrambling words. The latest version of the LLM got noticeably better at these sorts of tasks, at least if you’re looking at scores on tests like the verbal GRE or Math SAT. Still, the system is far from perfect, especially since its training data is still mostly natural-language text scraped from the internet.

Enderman told Gizmodo he has tried generating keys for multiple programs using the GPT-4 model, finding it handles key generation better than earlier versions of the large language model.

Don’t expect to start getting free keys for modern programs, though. As the YouTuber points out in his video, Windows 95 keys are far easier to spoof than keys for Windows XP and beyond, because Microsoft began building Product IDs into the operating system’s installation software.

Still, Enderman’s technique didn’t require any intense prompt engineering to get the AI to work around OpenAI’s blocks on creating product keys. Despite the moniker, AI systems like ChatGPT and GPT-4 are not actually “intelligent,” and they cannot recognize when they’re being abused beyond explicit bans on generating “disallowed” content.

This has more serious implications. Back in February, researchers at cybersecurity company Check Point showed that malicious actors had used ChatGPT to “improve” basic malware. There are plenty of ways to get around OpenAI’s restrictions, and cybercriminals have shown they are capable of writing basic scripts or bots to abuse the company’s API.

Earlier this year, cybersecurity researchers said they managed to get ChatGPT to create malware tools simply by writing several authoritative prompts with multiple constraints. The chatbot eventually obliged, generating malicious code and even mutating it to create multiple variants of the same malware.

Enderman’s Windows keys are a good example of how AI can be cajoled into bypassing its protections, but he told us he wasn’t too concerned about abuse, because the more people poke and prod the AI, the more gaps future releases will be able to close.

“I believe it’s a good thing, and companies like Microsoft shouldn’t ban users for abusing their Bing AI or nerf its capabilities,” he said. “Instead, they should reward active users for finding such exploits and selectively mitigate them. It’s all part of AI training, after all.”


Source: Gizmodo, “YouTuber Proves ChatGPT Can Manufacture Free Windows Keys”